About

I am a Software Developer at VMware with experience in microservices, databases, DevOps, automation, and analytics.

I completed my Bachelor of Engineering (B.E.) in Computer Engineering at Sardar Patel Institute of Technology, Mumbai, India, in May 2020.

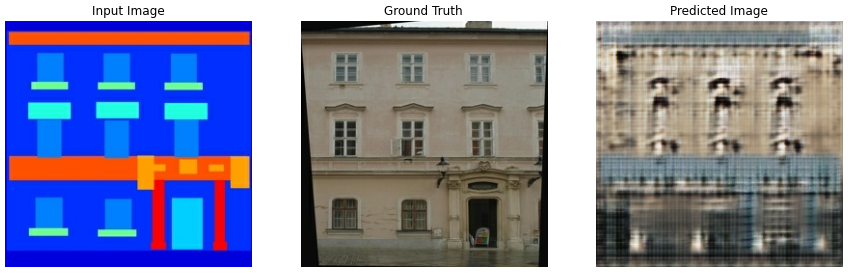

I am deeply interested in AI research and have authored a couple of papers. I have also won a couple of hackathons, most of them centered on AI.

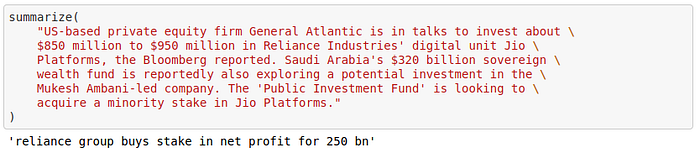

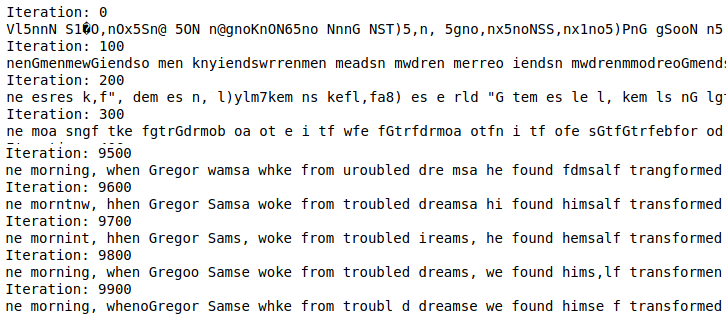

I blog about Machine Learning, Deep Learning, Reinforcement Learning, and Natural Language Processing on Medium.

Please have a look at my resume for more information.